PySpark Training in Hyderabad

DP-600 Certification | Classroom & Online Training | Real-Time Projects | 2.5-Month Course | Flexible EMI | Free Demo

Our PySpark Training in Hyderabad is designed to make you industry-ready through real-time big data processing projects, 40+ hours of hands-on Spark practice, and structured training in Apache Spark with Python for large-scale data engineering and analytics roles.

PySpark Training in Hyderabad with real-world use cases and practical implementation.

Batch Details

| Trainer Name | Mr. Manoj (Certified Trainer), Mr. Sree Ram (Certified Trainer) |

| Trainer Experience | 20+ Years |

| Next Batch Date | 2nd FEB 2026 (05:00 PM IST) (Online); 2nd FEB 2026 (11:00 AM IST) (Offline) |

| Training Modes | Online and Offline Training (Instructor Led) |

| Course Duration | 2.5 Months (Offline & Online) |

| Call us at | +91 9000368793 |

| Email Us at | fabricexperts.in@gmail.com |

| Demo Class Details | ENROLL FOR FREE DEMO SESSION |

PySpark Training in Hyderabad – Course Curriculum

- Data engineering concepts and lifecycle

- Structured vs unstructured data

- OLTP vs OLAP systems

- Batch vs streaming processing

- Data pipelines overview

- ETL vs ELT approaches

- Data quality fundamentals

- Modern data stack overview

- Cloud data platforms basics

- Real-world data engineering use cases

- What is Apache Spark

- What is PySpark

- Spark architecture explained

- Driver and executor roles

- RDDs vs DataFrames vs Datasets

- PySpark execution model

- Lazy evaluation concept

- Spark cluster modes

- Spark ecosystem overview

- Spark & PySpark use cases

- PySpark installation and setup

- Spark local vs cluster mode

- Working with SparkSession

- PySpark project structure

- Understanding Spark UI

- PySpark configurations

- Working with notebooks

- Handling dependencies

- Logging and debugging basics

- Introduction to PySpark DataFrames

- Creating DataFrames in PySpark

- Reading and writing data (CSV, JSON, Parquet)

- Schema inference and enforcement

- Transformations and actions

- PySpark SQL queries

- Joins and aggregations

- Window functions

- Optimization techniques

- Hands-on PySpark SQL practice

- Spark SQL architecture

- Catalyst optimizer overview

- Tungsten execution engine

- Query execution plans

- Caching and persistence

- Partitioning strategies

- Broadcast joins

- Shuffle optimization

- Memory management basics

- Performance tuning use cases

- Data ingestion strategies

- Batch ingestion using PySpark

- Streaming ingestion overview

- Handling large datasets

- Incremental data loading

- Error handling techniques

- Data validation checks

- ETL pipeline design

- Best practices

- End-to-end ETL pipeline demo

- Streaming fundamentals

- Structured streaming concepts

- Event-time vs processing-time

- Windowing and watermarking

- Stream sources and sinks

- Fault tolerance concepts

- Checkpointing

- Streaming performance tuning

- Real-time analytics workflows

- Industry streaming use cases

- PySpark with cloud storage

- Working with Data Lakes

- Reading data from cloud sources

- Security and access basics

- Cost-efficient data processing

- Large-scale data handling

- Schema evolution concepts

- Lakehouse basics

- Cloud analytics workflows

- Enterprise use cases

- Dimensional modeling basics

- Star and snowflake schemas

- Analytics-ready datasets

- Data transformation strategies

- Aggregation layers

- Query optimization

- Reporting datasets preparation

- BI tool integration concepts

- Business analytics use cases

- Analytics best practices

- PySpark performance tuning

- Partitioning and bucketing

- Memory optimization

- Job monitoring techniques

- Debugging failed Spark jobs

- Resource utilization tracking

- Cost optimization methods

- Production tuning tips

- Best practices

- Real-world optimization examples

- End-to-end PySpark project

- Batch + streaming pipelines

- Data ingestion to analytics flow

- Production-style architecture

- Version control basics

- Deployment concepts

- Error handling strategies

- Performance tuning

- Project documentation

- Industry-style datasets

- PySpark job roles overview

- Real-world project discussion

- Resume building guidance

- Interview-focused PySpark questions

- Coding interview practice

- Architecture discussion rounds

- SQL & Spark interview prep

- Career roadmap

- Industry expectations

- Job application strategies
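Broadcast joins, one of the performance topics in the curriculum above, are easiest to picture with a toy model. The sketch below is plain Python, not the Spark API, and assumes the smaller table fits comfortably in memory: the small side is turned into a hash table once and reused by every partition of the large side, so the large side never has to be shuffled. In PySpark the same idea is usually requested with the `broadcast()` hint from `pyspark.sql.functions`, for example `sales_df.join(broadcast(stores_df), "store_id")`; the function name, table names and columns here are hypothetical.

```python
# Toy model of a broadcast hash join in plain Python (not the Spark API).
# Assumption: the "dimension" table is small enough to copy to every worker.

def broadcast_hash_join(fact_partitions, small_table, key):
    """Inner-join each partition of the large table against a shared lookup.

    fact_partitions: list of partitions, each a list of dict rows (large side)
    small_table: list of dict rows (small side, "broadcast" to every partition)
    key: column name present on both sides
    """
    # Build the hash table once; Spark would ship a copy of it to each executor.
    lookup = {}
    for row in small_table:
        lookup.setdefault(row[key], []).append(row)

    joined = []
    for partition in fact_partitions:
        # Each partition is joined locally, so the large side is never shuffled.
        out = []
        for row in partition:
            for match in lookup.get(row[key], []):
                out.append({**row, **match})
        joined.append(out)
    return joined


sales = [
    [{"store_id": 1, "amount": 100}, {"store_id": 2, "amount": 40}],
    [{"store_id": 1, "amount": 15}, {"store_id": 3, "amount": 70}],
]
stores = [{"store_id": 1, "city": "Hyderabad"}, {"store_id": 2, "city": "Pune"}]

result = broadcast_hash_join(sales, stores, "store_id")
```

Rows whose key has no match (store 3 here) are dropped, as in any inner join, and the partition layout of the large side is preserved.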

Why Choose PySpark Training in Hyderabad

- Learn PySpark with Apache Spark for large-scale data processing.

- Hands-on training with real-time data engineering tasks

- Beginner-friendly teaching with step-by-step Python-based learning.

- Industry use cases explained in a simple and practical way

- Build end-to-end data pipelines using PySpark

- Trainers with real-world Spark & PySpark project experience

- Practical labs instead of theory-only sessions

- Covers Spark Core, PySpark SQL, and Streaming workflows

- Classroom training available in Hyderabad

- Live online instructor-led sessions

- Resume-ready projects and portfolios

- Interview preparation and career guidance

- Certification support for career growth

- Job-focused skills aligned with current market demand

Benefits of PySpark Training in Hyderabad

Our PySpark Training in Hyderabad is delivered by experienced data engineering professionals who guide you through Apache Spark, PySpark programming, distributed data processing, ETL pipelines, and performance optimization. You will work on real datasets, build industry-style projects, and gain job-ready big data engineering skills aligned with modern analytics and data engineering roles.

- Learn from Certified Industry Experts

Train under Spark and big data professionals with strong real-world experience in large-scale data engineering and analytics projects.

- Hands-On Experience with PySpark & Spark

Work directly with PySpark, Spark SQL, DataFrames, RDDs, and Structured Streaming to build real business data solutions.

- Project-Based, Practical Learning

Develop end-to-end PySpark pipelines, perform transformations, and generate insights using real-time, industry-style workflows.

- Core PySpark & Spark Architecture Skills

Learn how PySpark works with Spark architecture to process distributed data efficiently at scale.

- Complete Data Engineering Skill Development

Gain skills in data ingestion, transformation, processing, and analytics using PySpark for batch and streaming workloads.

- Master Spark Data Processing Concepts

Understand the Spark execution model, lazy evaluation, partitioning, and memory management for optimized processing.

- Spark Performance Optimization

Learn performance tuning, job optimization, and cost-efficient processing techniques used in large-scale data systems.

- Real-Time & Batch Data Processing

Work with both batch and streaming pipelines using PySpark Structured Streaming for real-world enterprise use cases.

- Flexible Training Options

Choose classroom training in Hyderabad or live online sessions with weekday and weekend batch options.

- Career-Focused & Job-Oriented Program

Training is designed to match real PySpark and Spark Data Engineer job roles in the current market.

- Updated Industry-Aligned Curriculum

Curriculum follows current Apache Spark, PySpark, and big data industry standards and best practices.

- Career Community & Expert Mentorship

Get ongoing mentorship, peer learning, and expert guidance from Spark professionals and trainers.

- Resume & Interview Preparation Support

Receive support for resume building, LinkedIn optimization, and PySpark interview preparation.

- Unlimited Batch Access

Get one-year access to PySpark training batches to revise concepts anytime.

- Strong Career Opportunities in Hyderabad

Prepare confidently for roles such as PySpark Data Engineer, Big Data Engineer, Spark Developer, Analytics Engineer, and Data Platform Engineer.

What is PySpark Training in Hyderabad?

PySpark Training in Hyderabad teaches you how to build scalable, high-performance data pipelines using Apache Spark with Python (PySpark) for real-world data engineering and analytics solutions.

This training explains how PySpark works with Apache Spark’s distributed architecture to handle large-scale data engineering, analytics, and batch/streaming workloads efficiently.
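To make the distributed model concrete, here is a minimal plain-Python simulation of a two-stage Spark job; it is an illustration under simplifying assumptions, not how Spark is implemented. Each input partition is counted independently (as executor tasks would do), the partial results are hash-partitioned by key (the shuffle), and each reducer then owns a disjoint set of keys before the driver collects the answer. In PySpark the equivalent RDD code would look roughly like `rdd.flatMap(lambda line: line.split()).map(lambda w: (w, 1)).reduceByKey(operator.add)`; the helper names `partition_for` and `distributed_word_count` below are hypothetical.

```python
import zlib
from collections import Counter


def partition_for(key, num_partitions):
    # Deterministic hash (crc32) so the same key always lands in the same partition.
    return zlib.crc32(key.encode("utf-8")) % num_partitions


def distributed_word_count(input_partitions, num_reducers=2):
    # Stage 1 ("map" tasks): each executor counts words in its own partition only.
    partials = [
        Counter(word for line in part for word in line.split())
        for part in input_partitions
    ]

    # Shuffle: route every (word, count) pair to the reducer that owns that key.
    shuffled = [Counter() for _ in range(num_reducers)]
    for partial in partials:
        for word, count in partial.items():
            shuffled[partition_for(word, num_reducers)][word] += count

    # Stage 2 ("reduce" tasks) own disjoint keys, so the driver can simply
    # merge their outputs, much like an action such as collect() would.
    result = {}
    for reducer in shuffled:
        result.update(reducer)
    return result


lines = [["spark is fast", "spark is distributed"], ["python and spark"]]
counts = distributed_word_count(lines)
```

Counting inside each partition before the shuffle (a map-side combine) means only one record per distinct word per partition crosses the network, which is the main reason `reduceByKey` is usually preferred over grouping first and counting afterwards.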

Learn how PySpark integrates with data lakes and cloud storage systems to store, manage, and process enterprise data in a centralized and scalable manner.

Data Ingestion & ETL with PySpark

Understand how PySpark pipelines ingest, transform, and load data from multiple sources using automated and scalable ETL workflows.
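A minimal extract-validate-transform-load flow can be sketched in plain Python. The sample data, column names and validation rules below are assumptions for illustration only; a PySpark pipeline would typically read with `spark.read.csv` and write Parquet rather than JSON lines, but the shape is the same: bad rows are quarantined with a reason instead of being silently dropped, and only clean, typed rows are loaded.

```python
import csv
import io
import json

# Hypothetical raw input: row 2 has a missing amount, row 4 has a bad id.
RAW_CSV = """order_id,amount,country
1,250.50,IN
2,,IN
3,99.90,us
x,10,IN
"""


def extract(text):
    # Extract: parse raw CSV text into dict rows (all values are strings).
    return list(csv.DictReader(io.StringIO(text)))


def validate(row):
    # Return an error message, or None if the row passes every check.
    if not row["order_id"].isdigit():
        return "order_id is not an integer"
    if row["amount"] == "":
        return "amount is missing"
    return None


def transform(row):
    # Cast types and standardise country codes to upper case.
    return {
        "order_id": int(row["order_id"]),
        "amount": float(row["amount"]),
        "country": row["country"].upper(),
    }


def run_pipeline(text):
    good, rejected = [], []
    for row in extract(text):
        error = validate(row)
        if error:
            rejected.append({**row, "error": error})  # quarantine, keep the reason
        else:
            good.append(transform(row))
    # Load: serialise clean rows as JSON lines (a stand-in for writing Parquet).
    output = "\n".join(json.dumps(r) for r in good)
    return output, rejected


loaded, rejects = run_pipeline(RAW_CSV)
```

Keeping the rejected rows, with the reason attached, makes data-quality problems visible and lets them be fixed and replayed later.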

Gain hands-on experience building large-scale data pipelines using PySpark DataFrames, Spark SQL, and distributed processing for both structured and unstructured data.

Spark Architecture & Data Processing

This course covers the Spark execution model, lazy evaluation, partitioning, and optimization techniques, helping you manage performance, reliability, and scalability in real enterprise environments.
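Lazy evaluation can be shown with a toy dataset class, written in plain Python as an assumption-labelled sketch rather than Spark's own code. Transformations (`map`, `filter`) only append steps to a plan; nothing runs until an action (`collect`) is called, and then each record flows through all steps in a single pass. In PySpark, methods such as `filter` and `select` are transformations and `count`, `collect` or a `write` are actions; Spark additionally optimises the whole plan with the Catalyst optimizer before running it, which this toy does not attempt. The class name `LazyDataset` is hypothetical.

```python
class LazyDataset:
    """Toy model of lazy evaluation: transformations build a plan, actions run it."""

    def __init__(self, source, plan=()):
        self._source = source  # the underlying data (a list)
        self._plan = plan      # tuple of ("map" | "filter", function) steps

    # Transformations: return a new dataset with one more step; nothing executes.
    def map(self, fn):
        return LazyDataset(self._source, self._plan + (("map", fn),))

    def filter(self, pred):
        return LazyDataset(self._source, self._plan + (("filter", pred),))

    # Action: only now is the plan executed, one record at a time (pipelined).
    def collect(self):
        out = []
        for item in self._source:
            keep = True
            for op, fn in self._plan:
                if op == "map":
                    item = fn(item)
                elif not fn(item):  # a failed filter drops the record
                    keep = False
                    break
            if keep:
                out.append(item)
        return out


calls = []


def double(x):
    calls.append(x)  # record each time the function actually runs
    return x * 2


ds = LazyDataset([1, 2, 3, 4]).map(double).filter(lambda x: x > 4)
calls_before = len(calls)  # defining the pipeline has not called double() yet
result = ds.collect()      # the action triggers execution of the whole plan
```

Deferring execution is what gives Spark the chance to inspect and rewrite the full plan (for example, pushing filters closer to the data source) before any work is done.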

Learn how PySpark supports data preparation and feature engineering using Python and Spark, enabling smooth integration with machine learning workflows.

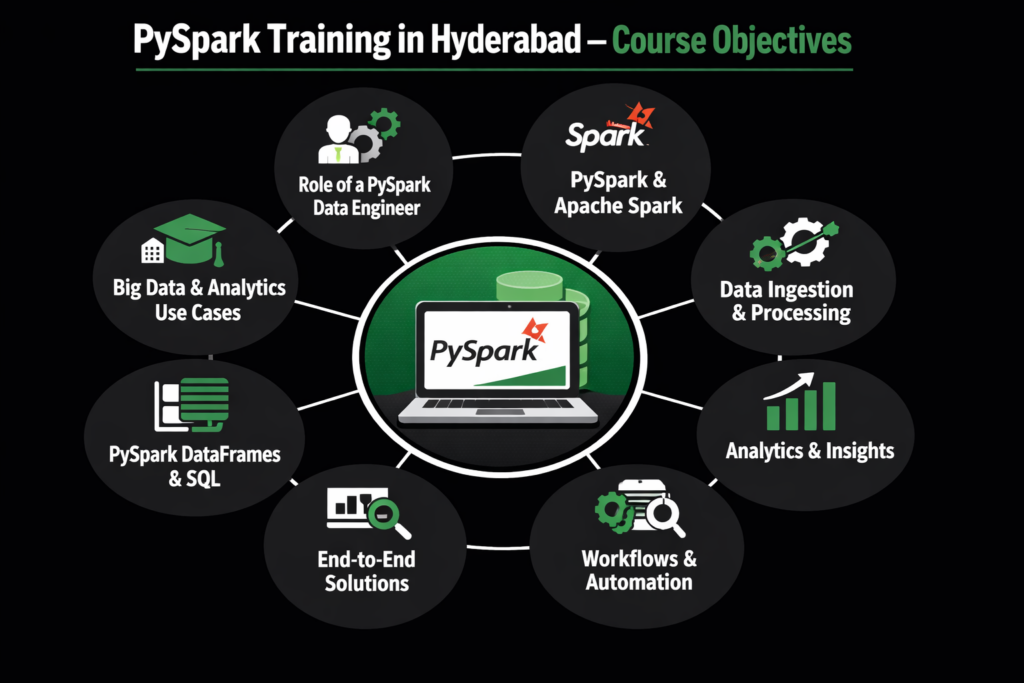

PySpark Training in Hyderabad – Course Objectives

The PySpark Training in Hyderabad is designed to help learners master modern data engineering and big data analytics by using Apache Spark with Python (PySpark) as a scalable, enterprise-grade data processing platform.

This training enables students to understand how PySpark works on top of Apache Spark to simplify large-scale data processing, analytics, and real-time data workflows. Learners will build end-to-end data pipelines, automate Spark jobs, process massive datasets, and create analytics-ready outputs for business reporting and insights.

- Understand the role of a PySpark Data Engineer in modern data platforms.

- Learn how PySpark works with Apache Spark for distributed data processing.

- Master data ingestion, transformation, and processing using PySpark

- Gain hands-on experience with PySpark DataFrames, Spark SQL, and Spark environments.

- Implement end-to-end data engineering and analytics solutions using PySpark

- Build optimized, analytics-ready datasets for reporting and BI tools

- Understand PySpark workflows, jobs, and automation processes.

- Analyze data insights and business trends using Spark-based analytics workflows.

- Enhance knowledge of big data engineering and enterprise analytics use cases.

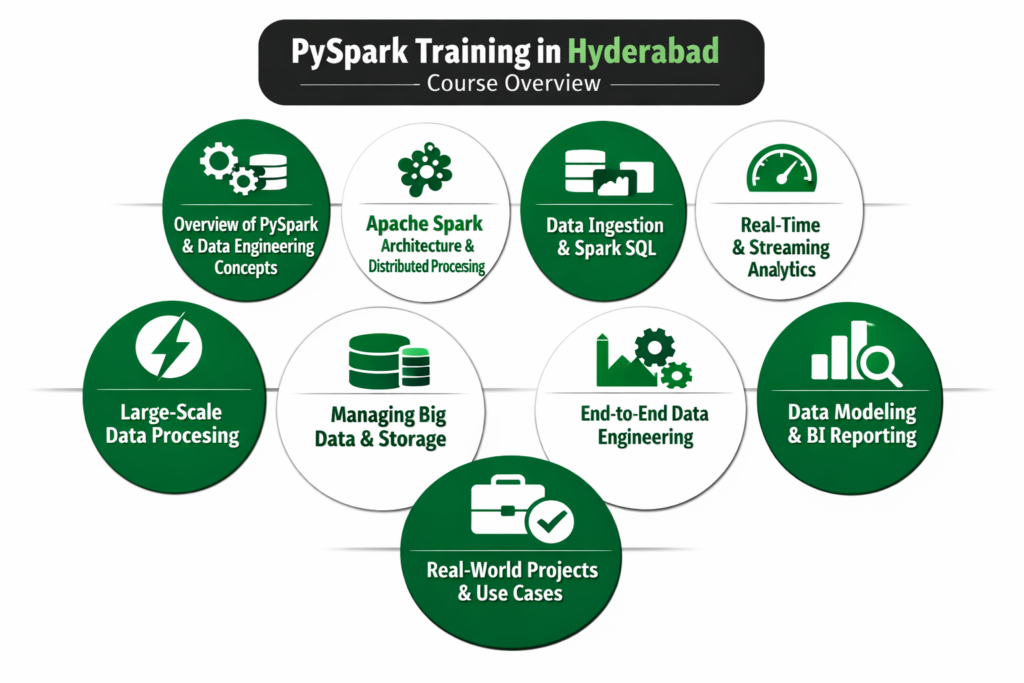

PySpark Training in Hyderabad – Course Overview

The PySpark Training in Hyderabad course is designed to help learners build modern, scalable data engineering solutions using Apache Spark with Python (PySpark). This training emphasizes hands-on PySpark pipelines, distributed data processing, real-time analytics, and performance optimization used in real-world big data environments.

What You Will Learn

- Overview of PySpark and modern data engineering concepts

- Understanding Apache Spark architecture and distributed processing

- Building scalable data pipelines using PySpark and Spark

- Managing large datasets, partitions, and optimized storage formats

- Large-scale data processing using Apache Spark with PySpark

- Real-time and streaming analytics using PySpark Structured Streaming

- End-to-end data engineering workflows using PySpark

- Industry-aligned projects and real-world PySpark use cases

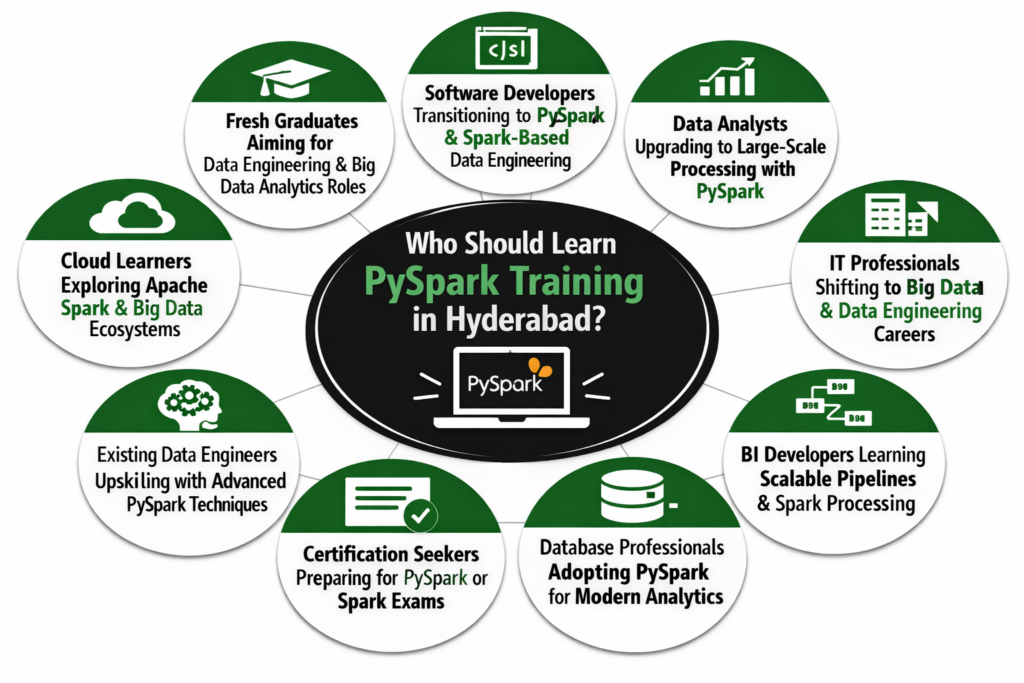

Who Should Take PySpark Training in Hyderabad?

The PySpark Training in Hyderabad is ideal for anyone looking to build or grow a career in big data engineering and analytics. This course is suitable for beginners, working professionals, and career switchers who want hands-on experience with Apache Spark and PySpark for large-scale data processing.

- Fresh graduates aiming for data engineering and big data analytics roles

- Software developers transitioning into PySpark and Spark-based data engineering.

- Data analysts upgrading to large-scale data processing with PySpark.

- IT professionals planning a shift to big data and data engineering careers.

- BI developers learning scalable data pipelines and Spark processing.

- Database professionals adopting PySpark for modern analytics workflows.

- Professionals preparing for PySpark or Spark-related certifications.

- Cloud learners exploring Apache Spark and big data ecosystems.

- Tech professionals seeking high-demand PySpark and big data engineering job roles

PySpark Training in Hyderabad – Modes

- Certified Trainers with 20+ Years of Experience

- One-on-One Project Mentorship

- Live Project Exposure

- Job Guarantee Program

- 100% Placement Guarantee*

- Mock Interviews

- Free & Paid Internships

- Get support till you are placed

- Unlimited Class/Batch Access for 1 Year

- Experienced Trainers with 15+ Years in the Field

- Daily recorded videos with lifetime access

- Live projects on your own domain with trainer support

- 100% Job Placement Assurance for Participants

- Mock Interviews and Resume Support

- Multiple Batches Access for 1 Year

- Lifetime Access to All Course Videos

- Comprehensive Coverage from Basic to Advanced Levels

- 90+ High-Quality Recorded Videos

- Practical Examples and Case Studies Included

- Regular Updates with New Videos

PySpark Training in Hyderabad – Pre-requisites

The PySpark Training in Hyderabad is designed to be beginner-friendly. No prior experience with PySpark or Apache Spark is required. However, having a few basic skills will help you understand big data and data engineering concepts faster. All topics are explained clearly from the fundamentals.

- Basic understanding of data concepts such as rows, columns, and tables

- Familiarity with SQL fundamentals and simple queries

- Basic knowledge of Python programming (helpful, not mandatory)

- General awareness of big data or cloud computing concepts

- Basic knowledge of databases or data analytics is an advantage.

- Logical thinking and problem-solving mindset

PySpark Training in Hyderabad – Career Opportunities

PySpark Training in Hyderabad opens the door to high-demand careers in big data engineering and analytics. By mastering Apache Spark with PySpark, large-scale data processing, and real-time analytics, professionals gain skills that are highly valued across industries.

After completing this course, learners are prepared for PySpark and Spark-based data engineering roles in Hyderabad and across India. As organizations increasingly adopt Apache Spark for large-scale analytics, the demand for skilled professionals who can design, optimize, and manage distributed data platforms continues to grow rapidly.

PySpark Data Engineer

Design and build scalable data pipelines using PySpark, Spark SQL, DataFrames, and distributed processing frameworks.

Big Data Engineer

Develop and maintain large-scale data processing systems using Apache Spark, PySpark, and data lake architectures.

Analytics Engineer

Create end-to-end analytics solutions using PySpark to deliver clean, analytics-ready datasets for reporting and insights.

Real-Time Data Engineer

Work with streaming pipelines, event-driven data, and real-time analytics using PySpark Structured Streaming.

Business Intelligence Developer

Build analytics datasets and reporting layers consumed by BI tools using PySpark-processed data.

Data Platform Engineer

Manage, optimize, and govern enterprise data platforms powered by Apache Spark and PySpark environments.

PySpark Training in Hyderabad - Tools Covered

PySpark Training in Hyderabad – Training Comparison

| Category | Traditional Training | PySpark Training in Hyderabad |

| Teaching Style | Mostly theory-based sessions | Hands-on, project-driven PySpark workflows |

| Trainer Expertise | General IT trainers | Experienced Spark & PySpark professionals |

| Tools Covered | Limited data concepts | PySpark, Apache Spark, Spark SQL, Structured Streaming |

| Practical Exposure | Minimal hands-on practice | Real-time PySpark lab sessions |

| Projects | Basic or outdated tasks | Industry-level PySpark projects with a capstone |

| Certification Support | Basic exam orientation | PySpark & Spark interview and certification guidance |

| Placement Assistance | Limited or no support | Strong placement guidance and career support |

| Learning Flexibility | Fixed classroom schedules | Online & classroom with weekday/weekend options |

| Learning Resources | PDFs and static notes | Lifetime video access & updated hands-on labs |

PySpark Training in Hyderabad Trainers

INSTRUCTOR

15+ Years Experience

- Mr. Manoj is a seasoned Data & Analytics professional with 15+ years of industry experience, specializing in modern data engineering, cloud analytics, and enterprise-scale BI solutions. Having worked with leading global organizations, he has mastered the art of architecting and optimizing data ecosystems using Microsoft Azure, Power BI, and now Microsoft Fabric.

- His deep expertise in end-to-end data architectures, real-time analytics, and AI-driven insights makes him one of the most trusted Microsoft Fabric trainers in the industry today.

- At Fabric Masters, we proudly believe that Mr. Manoj is one of the best Microsoft Fabric trainers in Hyderabad today, delivering exceptional value through real-time projects, deep conceptual clarity, and industry-relevant guidance.

INSTRUCTOR

About the tutor:

- Mr. Sree Ram is a highly experienced Microsoft Fabric specialist with over 25 years in the data engineering and analytics domain. He has worked with top enterprises to design, build, and optimize end-to-end data solutions using Azure, Power BI, and the unified Microsoft Fabric platform.

- With deep expertise in modern data architectures, cloud analytics, real-time processing, and enterprise BI, he has played a key role in helping organizations transform raw data into powerful business insights. His contributions include implementing scalable data pipelines, managing Lakehouse and Warehouse solutions, and ensuring seamless data governance across large enterprises.

PySpark Training in Hyderabad – Certifications You Will Achieve

Certifications

- Apache Spark Certification (Guidance) – Validates core Apache Spark and PySpark data processing skills

- PySpark Developer Certification (Guidance) – Proves hands-on expertise in PySpark DataFrames, Spark SQL, and ETL pipelines.

- Big Data Engineering Certification (Guidance) – Certifies skills in large-scale data processing, analytics, and pipeline design using Spark

- Python for Data Engineering Certification (Guidance) – Builds strong fundamentals in Python programming for data engineering workflows.

- Spark Structured Streaming Certification (Guidance) – Supports validation of real-time and streaming data processing skills using PySpark.

Apache Spark & Big Data Certification Exam Details (2026)

| Certification Name | Exam Code | Exam Fee (USD / Approx. INR) | Duration | Passing Score |

| Apache Spark Developer Certification (Guidance) | Spark-Dev | $165 (₹13,500 – ₹15,000) | 120 minutes | Vendor-defined |

| PySpark Data Engineer Certification (Guidance) | PySpark-DE | $165 (₹13,500 – ₹15,000) | 120 minutes | Vendor-defined |

| Big Data Engineering Certification (Guidance) | BDE-101 | $150 (₹12,000 – ₹14,000) | 120 minutes | Vendor-defined |

| Python for Data Engineering Certification | Pyth-DE | $99 (₹8,000 – ₹9,000) | 90 minutes | Vendor-defined |

| Spark Structured Streaming Certification (Guidance) | Spark-SS | $165 (₹13,500 – ₹15,000) | 120 minutes | Vendor-defined |

Top Companies Hiring PySpark Professionals in Hyderabad

Job roles and responsibilities

PySpark with Apache Spark brings together large-scale data processing, ETL pipelines, analytics, and real-time workloads. Professionals design end-to-end data engineering solutions using PySpark, Spark SQL, and Spark’s distributed execution environment.

PySpark professionals work with data lakes and cloud storage systems to access, manage, and process enterprise data efficiently across analytics and reporting workloads.

PySpark Data Engineering – Scalable Processing

PySpark data engineers ingest, transform, and process large datasets using PySpark DataFrames, Spark SQL, automated jobs, and distributed processing techniques.
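Incremental data loading, one of the curriculum topics, is often implemented with a high-water mark: remember the latest timestamp already loaded and pick up only newer rows on the next run. The plain-Python sketch below assumes a hypothetical `updated_at` column, an in-memory target list and a dict for stored state; in PySpark the selection step would be a filter such as `df.filter(F.col("updated_at") > last_watermark)`, with `F` being `pyspark.sql.functions`.

```python
from datetime import datetime


def incremental_load(source_rows, target, state):
    """Append only rows newer than the stored high-water mark, then advance it.

    source_rows: list of dicts with an 'updated_at' datetime (hypothetical column)
    target: list acting as the destination table
    state: dict that persists the last loaded 'updated_at' under 'watermark'
    """
    last = state.get("watermark", datetime.min)  # first run: load everything
    new_rows = [r for r in source_rows if r["updated_at"] > last]
    target.extend(new_rows)
    if new_rows:
        state["watermark"] = max(r["updated_at"] for r in new_rows)
    return len(new_rows)


source = [
    {"id": 1, "updated_at": datetime(2026, 1, 1, 9, 0)},
    {"id": 2, "updated_at": datetime(2026, 1, 1, 10, 0)},
]
target, state = [], {}
first_run = incremental_load(source, target, state)   # loads both rows

source.append({"id": 3, "updated_at": datetime(2026, 1, 2, 8, 0)})
second_run = incremental_load(source, target, state)  # loads only the new row
```

Because the comparison is strict, a row that arrives later with exactly the same `updated_at` as the stored mark would be skipped; pipelines that must handle late or updated records usually add a MERGE/upsert step or change-data-capture instead of relying on timestamps alone.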

PySpark-based architectures support high-volume analytics and SQL workloads, enabling fast querying and scalable analytics for enterprise data platforms.

Analytics & Real-Time Data Processing

PySpark-processed data supports batch analytics, reporting, and real-time data processing through structured streaming and event-driven pipelines.
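Structured Streaming aggregates by event time and uses a watermark to decide how long to wait for late data. The plain-Python model below is a simplified sketch with assumed values (10-second tumbling windows, 5-second delay): the watermark is the latest event time seen minus the delay, and events older than the watermark are dropped. Spark's real behaviour is more nuanced (the watermark advances between micro-batches, and data behind the threshold is not guaranteed to be dropped), but the PySpark query it mirrors looks roughly like `df.withWatermark("event_time", "5 seconds").groupBy(window("event_time", "10 seconds"), "key").count()`.

```python
from collections import defaultdict

WINDOW = 10  # tumbling window size in seconds (assumed)
DELAY = 5    # watermark delay threshold in seconds (assumed)


def windowed_counts(events):
    """Count events per event-time window, dropping events behind the watermark.

    events: (event_time_seconds, key) tuples in *arrival* (processing-time) order.
    Simplification: the watermark is updated after every event, whereas Spark
    updates it between micro-batches.
    """
    counts = defaultdict(int)
    dropped = []
    max_event_time = float("-inf")
    for event_time, key in events:
        watermark = max_event_time - DELAY
        if event_time < watermark:
            dropped.append((event_time, key))  # too late for its window
            continue
        window_start = event_time - event_time % WINDOW
        counts[(window_start, key)] += 1
        max_event_time = max(max_event_time, event_time)
    return dict(counts), dropped


# Arrival order differs from event-time order: t=7 is late but within the
# delay, while t=3 arrives after the watermark has moved past it.
stream = [(1, "click"), (12, "click"), (7, "click"), (25, "click"), (3, "click")]
counts, dropped = windowed_counts(stream)
```

The event at t=7 still lands in the 0–10 window because it arrives before the watermark passes it; the event at t=3 arrives after the stream has reached t=25, so it is discarded instead of reopening an old window.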

Security, Governance & Reliability

PySpark environments follow enterprise data security practices, including access control, data validation, monitoring, and governance to ensure compliance and reliable data operations.

PySpark Training in Hyderabad for All Experience Levels

| Experience Level | Salary Range (₹/Year) | Who It’s For | What You Will Learn | Career Outcomes |

| Beginner (0–1 Year) | ₹3.5 L – ₹6 L | Freshers, non-IT graduates | PySpark basics, data concepts, Spark fundamentals, SQL, Python | Junior Data Engineer, Data Analyst Trainee |

| Junior (1–3 Years) | ₹6 L – ₹9 L | IT professionals, data analysts | PySpark ETL pipelines, Spark SQL, DataFrames, batch processing | PySpark Data Engineer, BI Developer |

| Mid-Level (3–5 Years) | ₹9 L – ₹14 L | Working data engineers | Advanced PySpark pipelines, performance tuning, streaming analytics | Senior Data Engineer, Analytics Engineer |

| Senior (5+ Years) | ₹14 L – ₹22 L+ | Architects, technical leads | Spark architecture design, optimization, and scalable big data solutions | Lead Data Engineer, Data Platform Architect |

| Career Switchers | ₹5 L – ₹10 L | Developers, testers, DBAs | End-to-end PySpark workflows with real-time and batch projects | PySpark Engineer, Big Data Engineer |

Skills Developed After PySpark Training in Hyderabad

Strengthen your ability to analyze large-scale datasets using PySpark, Spark SQL, and distributed processing to uncover patterns and deliver actionable insights.

Learn to work effectively with data engineers, analysts, BI developers, and cloud teams to deliver PySpark-based data engineering and analytics solutions.

Develop precision in data transformations, pipeline monitoring, and data quality checks across PySpark and Spark environments.

Build the ability to quickly adopt new Spark features and PySpark enhancements while integrating data from multiple enterprise data sources.

Improve efficiency by organizing PySpark jobs, managing batch schedules, and handling streaming workflows to deliver analytics outputs on time.

Gain hands-on experience debugging PySpark jobs, resolving performance issues, and optimizing Spark workloads in real-world scenarios.

Enhance decision-making skills by evaluating data quality, pipeline design, and performance trade-offs within PySpark-based architectures.

Learn to clearly communicate insights using data summaries, technical documentation, and stakeholder-ready reports generated from PySpark-processed data.

Where Is PySpark Used?

| Industry / Domain | How PySpark Is Used |

| IT & Software Services | Build scalable PySpark data pipelines, big data platforms, and analytics systems |

| Banking & Financial Services | Risk analysis, fraud detection, and large-scale financial data processing |

| Healthcare | Patient data analytics, operational insights, and compliance reporting using Spark |

| Retail & E-Commerce | Sales analytics, demand forecasting, and customer behavior analysis |

| Telecom | Network monitoring, streaming analytics, and real-time data processing |

| Manufacturing | Supply chain analytics and predictive maintenance using PySpark |

| Media & Entertainment | Streaming analytics, content performance tracking, and audience insights |

| Logistics & Transportation | Route optimization, demand forecasting, and operational analytics |

| Education | Learning analytics, student performance dashboards, and reporting |

| Government & Public Sector | Policy analysis, governance reporting, and large-scale data insights |

Student Testimonials – PySpark Training in Hyderabad

FAQs – PySpark Training in Hyderabad

1. What is PySpark Training?

It is a modern big data engineering program that uses Apache Spark with Python (PySpark) to build scalable data pipelines, analytics solutions, and real-time processing systems.

2. Who should learn PySpark?

This course is ideal for freshers, data analysts, software developers, IT professionals, and career switchers aiming for big data and data engineering roles.

3. Do I need coding experience to join this course?

Basic Python or SQL knowledge is helpful but not mandatory. The course starts from fundamentals and gradually introduces PySpark and Spark concepts step by step.

4. What tools are covered in this training?

You will work with PySpark, Apache Spark, Spark SQL, DataFrames, Structured Streaming, and big data processing tools.

5. Is PySpark used in real companies?

Yes, many enterprises use Apache Spark and PySpark for large-scale data engineering, analytics, and real-time processing workloads.

6. What kind of projects will I work on?

You will build end-to-end PySpark data pipelines, process real datasets, implement ETL workflows, and handle batch and streaming data similar to industry use cases.

7. Does the course cover real-time analytics?

Yes, the training introduces PySpark Structured Streaming and real-time analytics concepts used in enterprise environments.

8. Will I learn BI or reporting concepts as part of this course?

Yes, you will learn how PySpark-processed data is prepared for analytics and consumed by BI and reporting tools.

9. Is this course suitable for fresh graduates?

Yes, the course is beginner-friendly and explains PySpark and data engineering concepts clearly from the basics.

10. Can working professionals take this course?

Yes, the training offers flexible schedules with hands-on labs, making it suitable for working professionals and upskilling needs.

11. What certifications does this course prepare me for?

The course supports PySpark, Apache Spark, Big Data, and Python-for-Data-Engineering certification paths through guided preparation.

12. How is PySpark different from traditional data tools?

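PySpark runs on Apache Spark, which splits work across the machines of a cluster and can keep intermediate data in memory. This lets it process datasets far larger than single-machine tools can handle, and it scales by adding executors rather than buying a bigger server.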

13. Is PySpark Data Engineering a good career in Hyderabad?

Yes, Hyderabad has a strong demand for PySpark and Spark professionals due to growing big data and cloud adoption.

14. Does the course include placement support?

Yes, placement support includes resume building, interview preparation, LinkedIn optimization, and career guidance.

15. What industries use PySpark?

Industries such as IT, banking, healthcare, retail, telecom, SaaS, manufacturing, logistics, and government use PySpark extensively.

16. Will I learn data security and governance concepts?

Yes, the course covers data quality, access control basics, monitoring, and governance best practices in Spark environments.

17. Can I switch careers into data engineering after this course?

Yes, many learners successfully transition from testing, development, or analytics roles into PySpark data engineering roles.

18. Is PySpark cloud-based?

Not necessarily. PySpark runs on a local machine, on on-premises Spark clusters, and on cloud platforms, which makes it both flexible and highly scalable.

19. How long does it take to become job-ready?

With consistent practice and project work, most learners become job-ready within a few months, depending on prior experience.

20. What makes this course different from traditional training?

This course emphasizes hands-on PySpark projects, real-world big data workflows, performance tuning, and career support rather than only theory.